In this article, we will look at servers, containers and why containerization has become so popular. On this journey we will also see where this technology is going and how long it will likely take to gain mainstream adoption.

Virtualization Technologies and How They Differ

To understand containers, we first need to understand some basic principles of virtualization. Virtualization has been around for a very long time, and I do not want to make a long story even longer, so I am going to skip to the virtualization technologies of the last decade that are most relevant to this discussion. There have been many different forms of virtualization, and each has its own advantages and disadvantages, which means different types of technology suit different use cases. For this discussion I will assume you understand most of the value of virtual machines (portability, scalability, cost savings, almost zero footprint, etc.).

To understand containers and their value and popularity, we need to understand where each type of technology gets its value. When you want many users to be able to connect to a server and use it as a desktop, you have a couple of options: session based computing (aka Terminal Server) and non-session based computing such as Virtual Desktop Infrastructure (VDI). Virtual machines (VMs) fall into the non-session based category as well. Let’s drill down into how these differ.

Session Based Computing

- With session based computing there is one operating system which is the server operating system (running on hardware or in a virtual machine).

- All applications on the server are running in the primary memory space.

- There is no isolation between clients. Security is done using technologies like group policy and rights limitations at the file or folder level on the server.

- No dedicated memory, CPU, disk IO or other resources are “reserved” for clients. They simply use whatever is available.

- Server class hardware with 256 GB of RAM and fast hard drives can usually accommodate 250 or even 500 users.

- Advantages:

    - On a single server you can serve many more clients than you can with non-session based computing (see the disparity section below for an explanation)

    - When you install or update applications, you install or update them for all users on the system

    - OS and security patches are applied once, on the server OS, and take effect for all users at the same time

    - Management can be, and usually is, done by policy (easy, but required)

    - Disk IO is significantly lower than with other forms of virtualization

    - Specialized (shared) licensing is often required but is much cheaper than individual licenses

- Disadvantages:

    - No (or little) physical isolation between users (security is done only through access rights and policy)

    - A sufficiently knowledgeable rogue user could “compromise” data in memory, the CPU path, etc.

    - Application compatibility can often be a challenge. If you have two versions of an API, application, or framework that will not run side by side on the same machine, you cannot use them in a session based environment. In this scenario, all users must use the same versions of the applications. Applications are system wide, not user specific.

    - You CANNOT give users administrative permissions, because they could then compromise any other user’s session or even the entire server.

Non-Session Based Computing (e.g. VDI or VM)

- With non-session based computing you have a server operating system + a client operating system for each person that connects to the server.

- The Server operating system almost always has to run on bare metal (not in a virtual machine)

- Then a desktop (or server) client is installed in a Virtual Machine on the host for each user/workload.

- No applications (usually) are running on the server. The server is dedicated to running the VMs

- All applications are installed on each client OS that needs the application.

- Memory is dedicated to each client and isolated. Clients have no direct access to the host server OS memory.

- There is physical isolation between clients because each is running in its own VM.

- Server class hardware with 256 GB of RAM and fast hard drives can usually accommodate 50 or fewer users.

- Advantages:

    - Physical isolation between users (each user has their own client OS, memory, etc.)

    - Application compatibility is not an issue because each user can have their own applications or application versions. Applications are user/client specific.

    - You can give users administrative permissions because each has a dedicated environment

- Disadvantages:

    - On a single server you CANNOT serve nearly as many clients as with session based computing (see the disparity section below)

    - When you install or update applications, you install or update them for each user on their client system

    - OS and security patches must be applied on the server OS and on each client VM (much more work and management)

    - Management can be, and usually is, done by group or local policy (not required, since each user can, and usually does, have their own dedicated VM)

    - Individual licenses are needed for all applications on all clients

    - Significant hard drive space is needed to account for the many installations of OSs and applications

    - Each user requires significantly more memory (to run a dedicated OS, apps, etc.)

    - Each client will consume significantly more CPU (a dedicated OS is always working)

Compute / Memory / Disk / Disk IO Disparity

The primary difference between session and non-session based computing is the number of users/clients you can have on a single host. Let’s look at why. In a session environment you have one OS: the server OS. In a non-session environment you have one OS for the host and one for each client, usually Windows 10 or Windows Server. That means if you have 50 users, you have 51 OSs (host + 50 users) running. The host OS is going to need a minimum of, say, 8 GB of memory, and each client OS consumes the memory it needs on top of that (think a desktop minimum of 2 GB to 6 GB each). It is not only memory: each of those 50 operating systems is working even when its user is not doing anything. Windows is doing plenty on its own: system logs are being written, system checks are being performed, etc. Now add all the applications on each of the machines. That is a ton of memory and even more disk IO, whether the users are working or idle.

Now let’s look at the size on disk. The single host OS is going to be a minimum of 10 GB on disk, and each of the 50 clients will also have a minimum OS size of probably 10 GB (not including applications and data). That is a 500 GB difference between session and non-session based computing just for the OSs. Now add Office, Acrobat, and the other applications that all users are using and you have another couple of gigabytes per user in a non-session environment (in a session environment, apps are installed only once on the server and all users share that copy). All of this makes session based computing much more efficient than non-session based computing.

Q: Why would anyone ever use non-session based computing? A: If they NEED a capability offered by non-session based computing (e.g. a user needs admin rights to run an application).
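The arithmetic behind this disparity can be sketched in a few lines of Python. This is only a rough illustration using the figures quoted in this section. The function and constant names are made up for the example, and it assumes the session server OS needs the same 8 GB of memory and 10 GB of disk as the VM host OS, which the text does not state explicitly.

```python
# Rough OS-only overhead for 50 users, using this section's illustrative figures.
USERS = 50
HOST_OS_RAM_GB = 8     # host/server OS memory (assumed the same in both models)
CLIENT_OS_RAM_GB = 2   # low end of the 2 GB to 6 GB range per client OS
OS_DISK_GB = 10        # minimum OS footprint on disk, host or client


def os_overhead(users: int, per_user_os: bool) -> dict:
    """Return the OS count, RAM and disk consumed by operating systems only."""
    clients = users if per_user_os else 0
    return {
        "os_instances": 1 + clients,
        "ram_gb": HOST_OS_RAM_GB + clients * CLIENT_OS_RAM_GB,
        "disk_gb": OS_DISK_GB + clients * OS_DISK_GB,
    }


session = os_overhead(USERS, per_user_os=False)
non_session = os_overhead(USERS, per_user_os=True)
disk_difference_gb = non_session["disk_gb"] - session["disk_gb"]

print("session:", session)
print("non-session:", non_session)
print("extra disk for client OSs (GB):", disk_difference_gb)
```

Applications, user data and idle background activity are deliberately left out, so the real gap would be larger than what this sketch reports.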


Containers

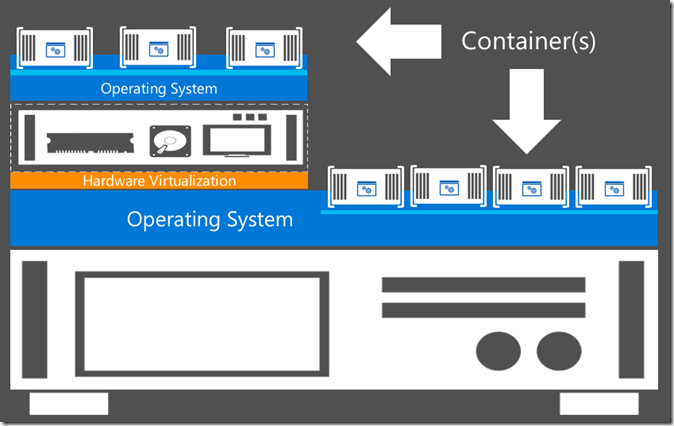

We already learned that virtual machines are an example of non-session based computing: each VM has a DEDICATED OS. Containers are a hybrid between session and non-session based computing. They give you most of the advantages of both without most of their disadvantages: the efficiency of a shared OS, plus the isolation (containers live in user mode), plus even more great advantages. Let’s dive into what containers are and how they work.

First, containers have a shared OS model, like session based computing. On top of that shared OS you can add any number of containers. Each container can then add runtime components, DLLs, applications, etc. inside the container, isolated from other containers. You could, as an example, have a container host with 5 (or even 500) containers running on top of it. Each of these containers would share the parent OS but could have its own runtime and applications. You decide how much of the system lives on the host and how much in each container. Sounds great, right? There is one downside: since you are sharing the OS, every container’s runtime and applications must be compatible with the container host.

Containers get even better! A container host can be virtualized; it does not need to run as the host on bare metal. Since it can be virtualized, and virtual machine OSs do not have to match the host OS, you could have any number of different operating systems and container solutions on one physical server.

Let’s look at some examples of how this may pan out.

- Host OS running Windows Server 2016 Datacenter is your VM host

    - Any number of containers could be running on Windows Server 2016 Datacenter (same OS)

    - Add a VM to the host running Windows Server 2016 Nano. You could then add any number of containers running Nano on top of that VM

    - Add a VM running Ubuntu X, then run any number of Ubuntu containers on top of it

    - Add a VM running RedHat X, then run any number of RedHat containers on top of it

- Host OS running Nano

    - Any number of containers could be running on Nano (same OS)

    - Add a VM to the host running Windows Server 2016 Nano. You could then add any number of containers running Nano on top of that VM

    - Add a VM running Ubuntu X, then run any number of Ubuntu containers on top of it

    - Add a VM running RedHat X, then run any number of RedHat containers on top of it

- Run containers in the cloud on Azure. Same as above: you can run any number of containers on any number of OSs and gain most of the advantages of session based computing
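One way to picture these layouts is as a small data model. The Python sketch below is hypothetical: the class names, fields and container names are invented for illustration, and it reduces the rule that containers must be compatible with their container host to a simple OS-name match, which is a deliberate simplification.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Container:
    name: str
    base_os: str  # OS the container's runtime and applications target


@dataclass
class ContainerHost:
    """A machine (bare metal or VM) whose OS is shared by its containers."""
    os: str
    virtualized: bool = False
    containers: List[Container] = field(default_factory=list)

    def add(self, container: Container) -> None:
        # Simplifying assumption: "compatible" means the same OS name.
        if container.base_os != self.os:
            raise ValueError(
                f"{container.name} targets {container.base_os}, host runs {self.os}"
            )
        self.containers.append(container)


# One physical server: a Datacenter host plus two virtualized container hosts.
physical = ContainerHost(os="Windows Server 2016 Datacenter")
nano_vm = ContainerHost(os="Windows Server 2016 Nano", virtualized=True)
ubuntu_vm = ContainerHost(os="Ubuntu", virtualized=True)

physical.add(Container("web", "Windows Server 2016 Datacenter"))
nano_vm.add(Container("api", "Windows Server 2016 Nano"))
ubuntu_vm.add(Container("cache", "Ubuntu"))

try:
    # An Ubuntu container does not belong on the Windows container host.
    physical.add(Container("cache-2", "Ubuntu"))
    mismatch_rejected = False
except ValueError:
    mismatch_rejected = True

print("mismatch rejected:", mismatch_rejected)
```

The point of the model is that variety comes from adding virtualized container hosts, not from mixing operating systems on a single container host.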

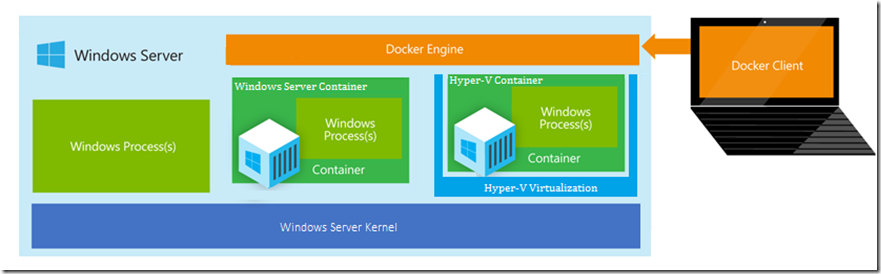

I do not want to go too deep into the many different types of containers, but I do think a quick overview of the space is appropriate. Containers can run on top of the Windows kernel (Windows Server Containers), on top of the virtualization stack (Hyper-V Containers, which give you hardware isolation), inside a VM (as a container or a Docker container), or in the Azure cloud as a service (Azure and/or Docker containers). There are many container engines, but the most popular is the Docker Engine. Microsoft has built its own container engine into Azure and Windows Server 2016, and both can also use the Docker container engine. Docker was initially designed and built for Linux, but through a partnership between Microsoft and Docker you can now run Windows containers using the same constructs and manage them in a similar way. You can read much more about this on the Microsoft Server Cloud Blog.

Docker Containers

What is Docker and why is it so popular? It is not because they were first. Docker has done a brilliant job of simplifying the creation and deployment of services using containers. They have created an “App Store”, if you will, for easily deploying already configured containers and container hosts, including clusters of container hosts. They have greatly simplified the distribution of the templates and added management on top of it.

Docker plays an important part in enabling the container ecosystem across Linux, Windows Server and the forthcoming Hyper-V Containers. We have been working closely with the Docker community to leverage and extend container innovations in Windows Server and Microsoft Azure, including submitting the development of the Docker engine for Windows Server Containers as an open contribution to the Docker repository on GitHub. In addition, we’ve made it easier to deploy the latest Docker engine using Azure extensions to setup a Docker host on Azure Linux VMs and to deploy a Docker-managed VM directly from the Azure Marketplace. Finally, we’ve added integration for Swarm, Machine and Compose into Azure and Hyper-V.

“Microsoft has been a great partner and contributor to the Docker project since our joint announcement in October of 2014,” said Nick Stinemates, Head of Business Development and Technical Alliances. “They have made a number of enhancements to improve the developer experience for Docker on Azure, while making contributions to all aspects of the Docker platform including Docker orchestration tools and Docker Client on Windows. Microsoft has also demonstrated its leadership within the community by providing compelling new content like dockerized .NET for Linux. At the same time, they’ve been working to extend the benefits of Docker containers- application portability to any infrastructure and an accelerated development process–to its Windows developer community.”

Container Scale

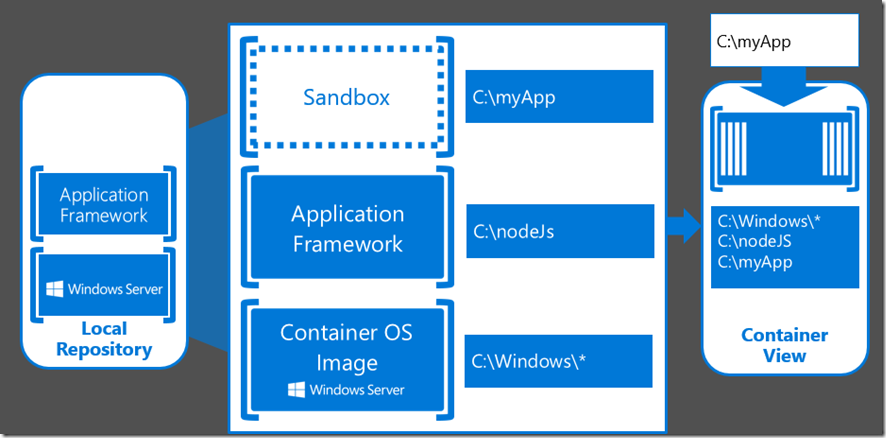

From a scale perspective, virtual machines changed the game. They allowed us to take hardware that was otherwise idle and put it to use by adding more machines to the same hardware. Containers allow us to repeat those gains and add a very significant multiplier on top. Consider this: you have a bare VM running Windows Server Datacenter. That VM will be about 10 GB in size. If you add a second VM running Windows Server Datacenter, that is another 10 GB or more. Each one has all those OS bits repeated, each one is spending memory running the OS, each one is generating disk IO, etc. Multiply that by another 20 VMs and you see we have the same problem we had with VDI above: a ton of waste and far more management.

When we scale the same scenario with containers, we have Windows Server 2016 Datacenter as the host OS (10 GB), then we add a container host to the server (less than 1 GB). Now we can add a container to run on top of that, which might consume only 10 MB (yes, MB, not GB). Add another 20 containers and you are still adding less than 1 GB of storage, but you have 21 servers running in just a bit more space than one server. You could add another 100 containers on top of the same hardware.

Now that you understand scale in terms of size, let’s also consider deployment. Each of those VMs could take several minutes to deploy, yet each of the containers will deploy (and start) in seconds. This is a game changer! This is why container technology is growing so rapidly and why containers will change the landscape in our datacenters in the coming years. A key component of this is the isolation of the container layer. You are able to “reuse” not only the host OS but also the container image. These images can be shared and used as a baseline (repository) for any other image. This additional layer of abstraction and isolation gives us almost limitless capabilities at a fraction of the cost of VMs.

The application image (container view) will see everything that is running under it. It is also important to note that the application does not need to be coded to work in containers. The application does not change, just its packaging and deployment. Developers still code against the target OS like they always do. There are no container APIs that have to be called. The magic is in the container engine.
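To put rough numbers on the storage comparison, here is a minimal Python sketch built on the sizes quoted in this section. The names are invented for the example, the container host is counted at its 1 GB upper bound, and the VM total ignores the hypervisor host itself, so treat the result as an estimate rather than a measurement.

```python
# Storage needed for 21 workloads, using this section's approximate sizes.
VM_SIZE_GB = 10.0              # one Windows Server Datacenter VM
HOST_OS_GB = 10.0              # Windows Server 2016 Datacenter as the host OS
CONTAINER_HOST_GB = 1.0        # "less than 1 GB", so this is an upper bound
CONTAINER_SIZE_GB = 10 / 1024  # roughly 10 MB per container


def vm_storage_gb(count: int) -> float:
    """Every VM repeats the full OS image."""
    return count * VM_SIZE_GB


def container_storage_gb(count: int) -> float:
    """Containers share one host OS and one container host."""
    return HOST_OS_GB + CONTAINER_HOST_GB + count * CONTAINER_SIZE_GB


workloads = 21
vm_total = vm_storage_gb(workloads)
container_total = container_storage_gb(workloads)

print(f"{workloads} VMs:        {vm_total:.1f} GB")
print(f"{workloads} containers: {container_total:.2f} GB")
print(f"ratio: about {vm_total / container_total:.0f}x")
```

Because each container adds only megabytes, the container total barely moves as the count grows, while the VM total grows by a full OS image every time.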

Adoption Timeline

It is impossible to accurately predict how long it will take for containers to reach the mainstream (meaning almost every business has them deployed). However, we are seeing staggering adoption rates among those who understand the value of this great technology. Once containers make it into the datacenter through the server operating system, Windows Server 2016, deployment rates will certainly increase exponentially. It took VMs over a decade to become mainstream. I predict that within the next 5 years containers will be a primary virtualization technology leveraged by all businesses. Stay tuned for more updates.

There is no need to wait for Windows Server 2016 to launch to take advantage of containers or to learn about them. You can stand up containers today in Azure using Docker. You might also want to check out Docker Swarm on Azure Container Service Preview.

See also http://aka.ms/windowscontainers and https://msdn.microsoft.com/virtualization/windowscontainers